Chapter 14 The General Linear Model

Remember that early in the book we described the basic model of statistics:

\[ data = model + error \] where our general goal is to find the model that minimizes the error, subject to some other constraints (such as keeping the model relatively simple so that we can generalize beyond our specific dataset). In this chapter we will focus on a particular implementation of this approach, which is known as the general linear model (or GLM). You have already seen the general linear model in the earlier chapter on Fitting Models to Data, where we modeled height in the NHANES dataset as a function of age; here we will provide a more general introduction to the concept of the GLM and its many uses. Nearly every model used in statistics can be framed in terms of the general linear model or an extension of it.

Before we discuss the general linear model, let’s first define two terms that will be important for our discussion:

- dependent variable: This is the outcome variable that our model aims to explain (usually referred to as Y)

- independent variable: This is a variable that we wish to use in order to explain the dependent variable (usually referred to as X).

There may be multiple independent variables, but for this course we will focus primarily on situations where there is only one dependent variable in our analysis.

A general linear model is one in which the model for the dependent variable is composed of a linear combination of independent variables that are each multiplied by a weight (which is often referred to as the Greek letter beta - \(\beta\)), which determines the relative contribution of that independent variable to the model prediction.

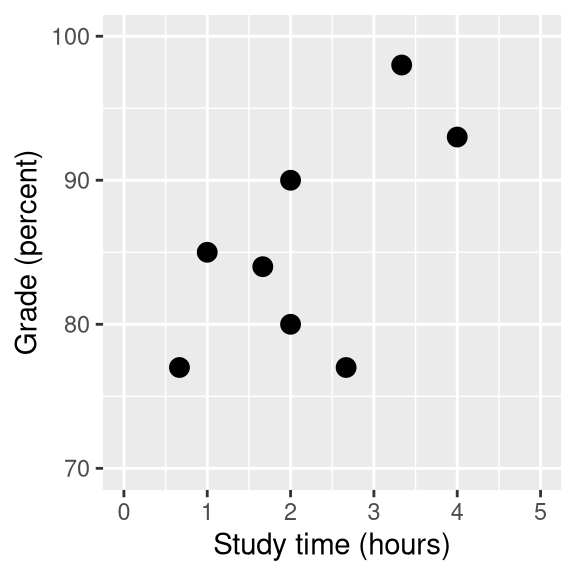

Figure 14.1: Relation between study time and grades

As an example, let’s generate some simulated data for the relationship between study time and exam grades (see Figure 14.1). Given these data, we might want to engage in each of the three fundamental activities of statistics:

- Describe: How strong is the relationship between grade and study time?

- Decide: Is there a statistically significant relationship between grade and study time?

- Predict: Given a particular amount of study time, what grade do we expect?

In the previous chapter we learned how to describe the relationship between two variables using the correlation coefficient. Let’s use our statistical software to compute that relationship for these data and test whether the correlation is significantly different from zero:

##

## Pearson's product-moment correlation

##

## data: df$grade and df$studyTime

## t = 2, df = 6, p-value = 0.09

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.13 0.93

## sample estimates:

## cor

## 0.63The correlation is quite high, but notice that the confidence interval around the estimate is very wide, spanning nearly the entire range from zero to one, which is due in part to the small sample size.

14.1 Linear regression

We can use the general linear model to describe the relation between two variables and to decide whether that relationship is statistically significant; in addition, the model allows us to predict the value of the dependent variable given some new value(s) of the independent variable(s). Most importantly, the general linear model will allow us to build models that incorporate multiple independent variables, whereas the correlation coefficient can only describe the relationship between two individual variables.

The specific version of the GLM that we use for this is referred to as as linear regression. The term regression was coined by Francis Galton, who had noted that when he compared parents and their children on some feature (such as height), the children of extreme parents (i.e. the very tall or very short parents) generally fell closer to the mean than did their parents. This is an extremely important point that we return to below.

The simplest version of the linear regression model (with a single independent variable) can be expressed as follows:

\[ y = x * \beta_x + \beta_0 + \epsilon \] The \(\beta_x\) value tells us how much we would expect y to change given a one-unit change in \(x\). The intercept \(\beta_0\) is an overall offset, which tells us what value we would expect y to have when \(x=0\); you may remember from our early modeling discussion that this is important to model the overall magnitude of the data, even if \(x\) never actually attains a value of zero. The error term \(\epsilon\) refers to whatever is left over once the model has been fit; we often refer to these as the residuals from the model. If we want to know how to predict y (which we call \(\hat{y}\)) after we estimate the \(\beta\) values, then we can drop the error term:

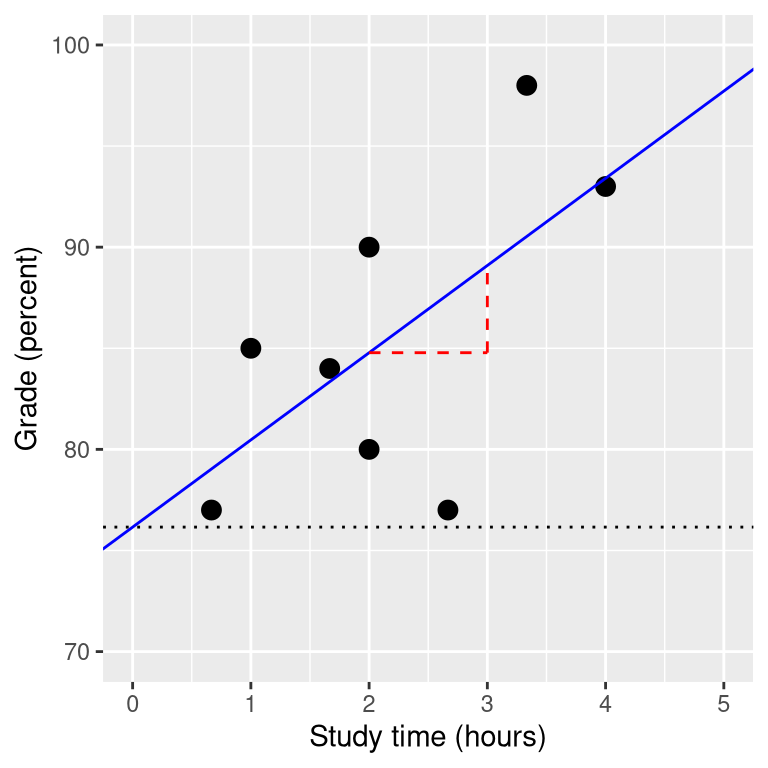

\[ \hat{y} = x * \hat{\beta_x} + \hat{\beta_0} \] Note that this is simply the equation for a line, where \(\hat{\beta_x}\) is our estimate of the slope and \(\hat{\beta_0}\) is the intercept. Figure 14.2 shows an example of this model applied to the study time data.

Figure 14.2: The linear regression solution for the study time data is shown in the solid line The value of the intercept is equivalent to the predicted value of the y variable when the x variable is equal to zero; this is shown with a dotted line. The value of beta is equal to the slope of the line – that is, how much y changes for a unit change in x. This is shown schematically in the dashed lines, which show the degree of increase in grade for a single unit increase in study time.

We will not go into the details of how the best fitting slope and intercept are actually estimated from the data; if you are interested, details are available in the Appendix.

14.1.1 Regression to the mean

The concept of regression to the mean was one of Galton’s essential contributions to science, and it remains a critical point to understand when we interpret the results of experimental data analyses. Let’s say that we want to study the effects of a reading intervention on the performance of poor readers. To test our hypothesis, we might go into a school and recruit those individuals in the bottom 25% of the distribution on some reading test, administer the intervention, and then examine their performance on the test after the intervention. Let’s say that the intervention actually has no effect, such that reading scores for each individual are simply independent samples from a normal distribution. Results from a computer simulation of this hypothetic experiment are presented in Table 14.1.

| Score | |

|---|---|

| Test 1 | 88 |

| Test 2 | 101 |

If we look at the difference between the mean test performance at the first and second test, it appears that the intervention has helped these students substantially, as their scores have gone up by more than ten points on the test! However, we know that in fact the students didn’t improve at all, since in both cases the scores were simply selected from a random normal distribution. What has happened is that some students scored badly on the first test simply due to random chance. If we select just those subjects on the basis of their first test scores, they are guaranteed to move back towards the mean of the entire group on the second test, even if there is no effect of training. This is the reason that we always need an untreated control group in order to interpret any changes in performance due to an intervention; otherwise we are likely to be tricked by regression to the mean. In addition, the participants need to be randomly assigned to the control or treatment group, so that there won’t be any systematic differences between the groups (on average).

14.1.2 The relation between correlation and regression

There is a close relationship between correlation coefficients and regression coefficients. Remember that Pearson’s correlation coefficient is computed as the ratio of the covariance and the product of the standard deviations of x and y:

\[ \hat{r} = \frac{covariance_{xy}}{s_x * s_y} \] whereas the regression beta for x is computed as:

\[ \hat{\beta_x} = \frac{covariance_{xy}}{s_x*s_x} \]

Based on these two equations, we can derive the relationship between \(\hat{r}\) and \(\hat{beta}\):

\[ covariance_{xy} = \hat{r} * s_x * s_y \]

\[ \hat{\beta_x} = \frac{\hat{r} * s_x * s_y}{s_x * s_x} = r * \frac{s_y}{s_x} \] That is, the regression slope is equal to the correlation value multiplied by the ratio of standard deviations of y and x. One thing this tells us is that when the standard deviations of x and y are the same (e.g. when the data have been converted to Z scores), then the correlation estimate is equal to the regression slope estimate.

14.1.3 Standard errors for regression models

If we want to make inferences about the regression parameter estimates, then we also need an estimate of their variability. To compute this, we first need to compute the residual variance or error variance for the model – that is, how much variability in the dependent variable is not explained by the model. We can compute the model residuals as follows:

\[ residual = y - \hat{y} = y - (x*\hat{\beta_x} + \hat{\beta_0}) \] We then compute the sum of squared errors (SSE):

\[ SS_{error} = \sum_{i=1}^n{(y_i - \hat{y_i})^2} = \sum_{i=1}^n{residuals^2} \] and from this we compute the mean squared error:

\[ MS_{error} = \frac{SS_{error}}{df} = \frac{\sum_{i=1}^n{(y_i - \hat{y_i})^2} }{N - p} \] where the degrees of freedom (\(df\)) are determined by subtracting the number of estimated parameters (2 in this case: \(\hat{\beta_x}\) and \(\hat{\beta_0}\)) from the number of observations (\(N\)). Once we have the mean squared error, we can compute the standard error for the model as:

\[ SE_{model} = \sqrt{MS_{error}} \]

In order to get the standard error for a specific regression parameter estimate, \(SE_{\beta_x}\), we need to rescale the standard error of the model by the square root of the sum of squares of the X variable:

\[ SE_{\hat{\beta}_x} = \frac{SE_{model}}{\sqrt{{\sum{(x_i - \bar{x})^2}}}} \]

14.1.4 Statistical tests for regression parameters

Once we have the parameter estimates and their standard errors, we can compute a t statistic to tell us the likelihood of the observed parameter estimates compared to some expected value under the null hypothesis. In this case we will test against the null hypothesis of no effect (i.e. \(\beta=0\)):

\[ \begin{array}{c} t_{N - p} = \frac{\hat{\beta} - \beta_{expected}}{SE_{\hat{\beta}}}\\ t_{N - p} = \frac{\hat{\beta} - 0}{SE_{\hat{\beta}}}\\ t_{N - p} = \frac{\hat{\beta} }{SE_{\hat{\beta}}} \end{array} \]

In general we would use statistical software to compute these rather than computing them by hand. Here are the results from the linear model function in R:

##

## Call:

## lm(formula = grade ~ studyTime, data = df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -10.656 -2.719 0.125 4.703 7.469

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 76.16 5.16 14.76 6.1e-06 ***

## studyTime 4.31 2.14 2.01 0.091 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6.4 on 6 degrees of freedom

## Multiple R-squared: 0.403, Adjusted R-squared: 0.304

## F-statistic: 4.05 on 1 and 6 DF, p-value: 0.0907In this case we see that the intercept is significantly different from zero (which is not very interesting) and that the effect of studyTime on grades is marginally significant (p = .09) – the same p-value as the correlation test that we performed earlier.

14.1.5 Quantifying goodness of fit of the model

Sometimes it’s useful to quantify how well the model fits the data overall, and one way to do this is to ask how much of the variability in the data is accounted for by the model. This is quantified using a value called \(R^2\) (also known as the coefficient of determination). If there is only one x variable, then this is easy to compute by simply squaring the correlation coefficient:

\[ R^2 = r^2 \] In the case of our study time example, \(R^2\) = 0.4, which means that we have accounted for about 40% of the variance in grades.

More generally we can think of \(R^2\) as a measure of the fraction of variance in the data that is accounted for by the model, which can be computed by breaking the variance into multiple components:

THIS IS CONFUSING, CHANGE TO RESIDUAL RATHER THAN ERROR

\[ SS_{total} = SS_{model} + SS_{error} \] where \(SS_{total}\) is the variance of the data (\(y\)) and \(SS_{model}\) and \(SS_{error}\) are computed as shown earlier in this chapter. Using this, we can then compute the coefficient of determination as:

\[ R^2 = \frac{SS_{model}}{SS_{total}} = 1 - \frac{SS_{error}}{SS_{total}} \]

A small value of \(R^2\) tells us that even if the model fit is statistically significant, it may only explain a small amount of information in the data.

14.2 Fitting more complex models

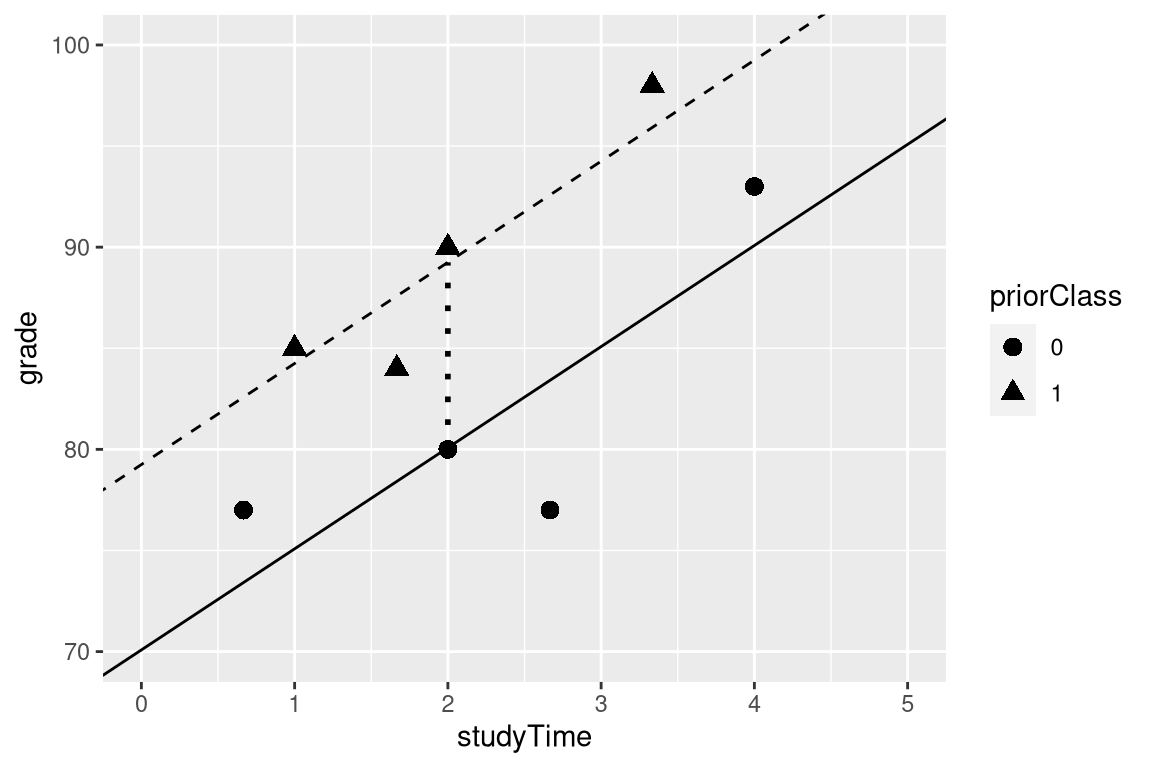

Often we would like to understand the effects of multiple variables on some particular outcome, and how they relate to one another. In the context of our study time example, let’s say that we discovered that some of the students had previously taken a course on the topic. If we plot their grades (see Figure 14.3), we can see that those who had a prior course perform much better than those who had not, given the same amount of study time. We would like to build a statistical model that takes this into account, which we can do by extending the model that we built above:

\[ \hat{y} = \hat{\beta_1}*studyTime + \hat{\beta_2}*priorClass + \hat{\beta_0} \] To model whether each individual has had a previous class or not, we use what we call dummy coding in which we create a new variable that has a value of one to represent having had a class before, and zero otherwise. This means that for people who have had the class before, we will simply add the value of \(\hat{\beta_2}\) to our predicted value for them – that is, using dummy coding \(\hat{\beta_2}\) simply reflects the difference in means between the two groups. Our estimate of \(\hat{\beta_1}\) reflects the regression slope over all of the data points – we are assuming that regression slope is the same regardless of whether someone has had a class before (see Figure 14.3).

##

## Call:

## lm(formula = grade ~ studyTime + priorClass, data = df)

##

## Residuals:

## 1 2 3 4 5 6 7 8

## 3.5833 0.7500 -3.5833 -0.0833 0.7500 -6.4167 2.0833 2.9167

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 70.08 3.77 18.60 8.3e-06 ***

## studyTime 5.00 1.37 3.66 0.015 *

## priorClass1 9.17 2.88 3.18 0.024 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 4 on 5 degrees of freedom

## Multiple R-squared: 0.803, Adjusted R-squared: 0.724

## F-statistic: 10.2 on 2 and 5 DF, p-value: 0.0173

Figure 14.3: The relation between study time and grade including prior experience as an additional component in the model. The solid line relates study time to grades for students who have not had prior experience, and the dashed line relates grades to study time for students with prior experience. The dotted line corresponds to the difference in means between the two groups.

14.3 Interactions between variables

In the previous model, we assumed that the effect of study time on grade (i.e., the regression slope) was the same for both groups. However, in some cases we might imagine that the effect of one variable might differ depending on the value of another variable, which we refer to as an interaction between variables.

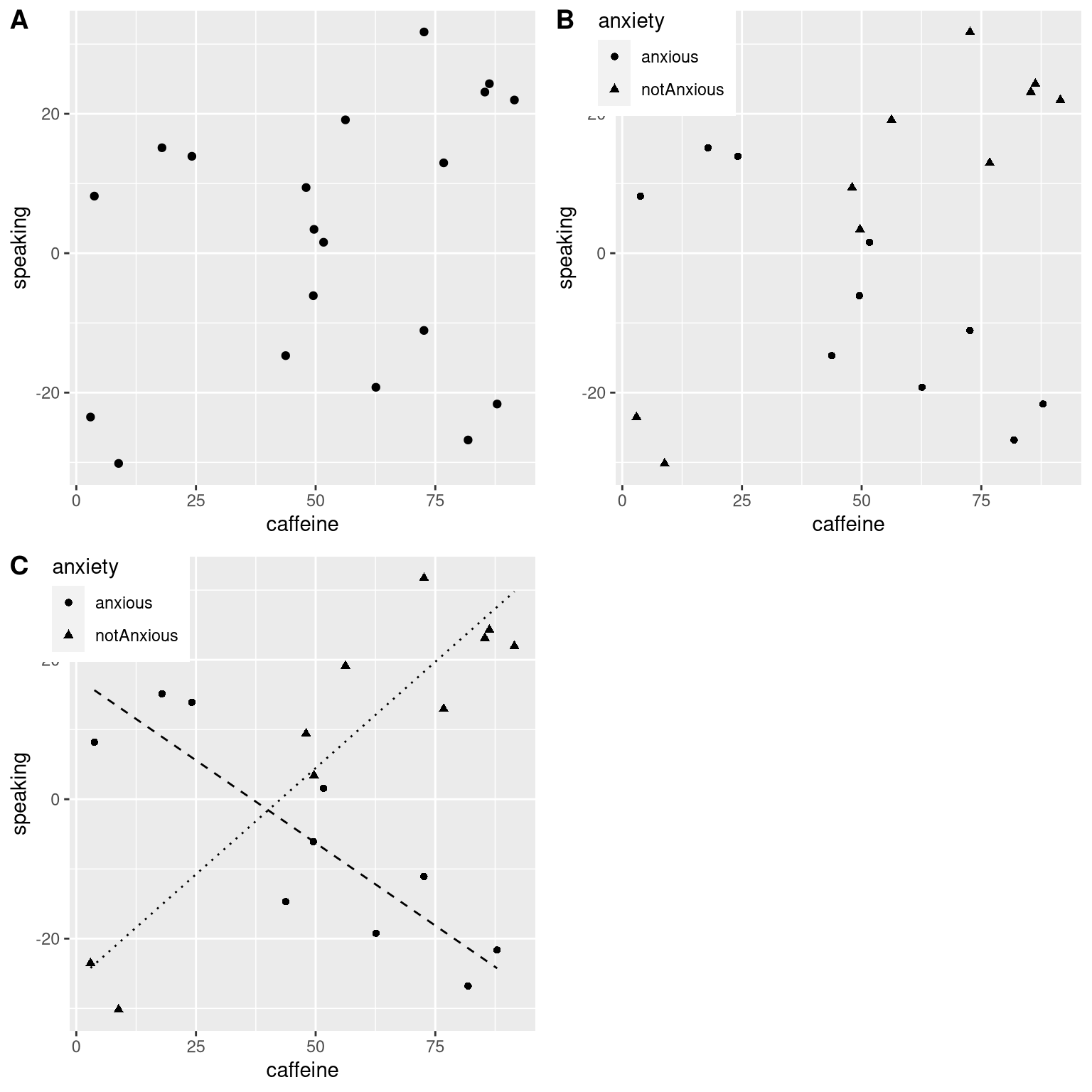

Let’s use a new example that asks the question: What is the effect of caffeine on public speaking? First let’s generate some data and plot them. Looking at panel A of Figure 14.4, there doesn’t seem to be a relationship, and we can confirm that by performing linear regression on the data:

##

## Call:

## lm(formula = speaking ~ caffeine, data = df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -33.10 -16.02 5.01 16.45 26.98

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -7.413 9.165 -0.81 0.43

## caffeine 0.168 0.151 1.11 0.28

##

## Residual standard error: 19 on 18 degrees of freedom

## Multiple R-squared: 0.0642, Adjusted R-squared: 0.0122

## F-statistic: 1.23 on 1 and 18 DF, p-value: 0.281But now let’s say that we find research suggesting that anxious and non-anxious people react differently to caffeine. First let’s plot the data separately for anxious and non-anxious people.

As we see from panel B in Figure 14.4, it appears that the relationship between speaking and caffeine is different for the two groups, with caffeine improving performance for people without anxiety and degrading performance for those with anxiety. We’d like to create a statistical model that addresses this question. First let’s see what happens if we just include anxiety in the model.

##

## Call:

## lm(formula = speaking ~ caffeine + anxiety, data = df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -32.97 -9.74 1.35 10.53 25.36

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -12.581 9.197 -1.37 0.19

## caffeine 0.131 0.145 0.91 0.38

## anxietynotAnxious 14.233 8.232 1.73 0.10

##

## Residual standard error: 18 on 17 degrees of freedom

## Multiple R-squared: 0.204, Adjusted R-squared: 0.11

## F-statistic: 2.18 on 2 and 17 DF, p-value: 0.144Here we see there are no significant effects of either caffeine or anxiety, which might seem a bit confusing. The problem is that this model is trying to use the same slope relating speaking to caffeine for both groups. If we want to fit them using lines with separate slopes, we need to include an interaction in the model, which is equivalent to fitting different lines for each of the two groups; this is often denoted by using the \(*\) symbol in the model.

##

## Call:

## lm(formula = speaking ~ caffeine + anxiety + caffeine * anxiety,

## data = df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11.385 -7.103 -0.444 6.171 13.458

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 17.4308 5.4301 3.21 0.00546 **

## caffeine -0.4742 0.0966 -4.91 0.00016 ***

## anxietynotAnxious -43.4487 7.7914 -5.58 4.2e-05 ***

## caffeine:anxietynotAnxious 1.0839 0.1293 8.38 3.0e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 8.1 on 16 degrees of freedom

## Multiple R-squared: 0.852, Adjusted R-squared: 0.825

## F-statistic: 30.8 on 3 and 16 DF, p-value: 7.01e-07From these results we see that there are significant effects of both caffeine and anxiety (which we call main effects) and an interaction between caffeine and anxiety. Panel C in Figure 14.4 shows the separate regression lines for each group.

Figure 14.4: A: The relationship between caffeine and public speaking. B: The relationship between caffeine and public speaking, with anxiety represented by the shape of the data points. C: The relationship between public speaking and caffeine, including an interaction with anxiety. This results in two lines that separately model the slope for each group (dashed for anxious, dotted for non-anxious).

One important point to note is that we have to be very careful about interpreting a significant main effect if a significant interaction is also present, since the interaction suggests that the main effect differs according to the values of another variable, and thus is not easily interpretable.

Sometimes we want to compare the relative fit of two different models, in order to determine which is a better model; we refer to this as model comparison. For the models above, we can compare the goodness of fit of the model with and without the interaction, using what is called an analysis of variance:

## Analysis of Variance Table

##

## Model 1: speaking ~ caffeine + anxiety

## Model 2: speaking ~ caffeine + anxiety + caffeine * anxiety

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 17 5639

## 2 16 1046 1 4593 70.3 3e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This tells us that there is good evidence to prefer the model with the interaction over the one without an interaction. Model comparison is relatively simple in this case because the two models are nested – one of the models is a simplified version of the other model, such that all of the variables in the simpler model are contained in the more complex model. Model comparison with non-nested models can get much more complicated.

14.4 Beyond linear predictors and outcomes

It is important to note that despite the fact that it is called the general linear model, we can actually use the same machinery to model effects that don’t follow a straight line (such as curves). The “linear” in the general linear model doesn’t refer to the shape of the response, but instead refers to the fact that model is linear in its parameters — that is, the predictors in the model only get multiplied the parameters, rather than a nonlinear relationship like being raised to a power of the parameter. It’s also common to analyze data where the outcomes are binary rather than continuous, as we saw in the chapter on categorical outcomes. There are ways to adapt the general linear model (known as generalized linear models) that allow this kind of analysis. We will explore these models later in the book.

14.5 Criticizing our model and checking assumptions

The saying “garbage in, garbage out” is as true of statistics as anywhere else. In the case of statistical models, we have to make sure that our model is properly specified and that our data are appropriate for the model.

When we say that the model is “properly specified”, we mean that we have included the appropriate set of independent variables in the model. We have already seen examples of misspecified models, in Figure 5.3. Remember that we saw several cases where the model failed to properly account for the data, such as failing to include an intercept. When building a model, we need to ensure that it includes all of the appropriate variables.

We also need to worry about whether our model satisfies the assumptions of our statistical methods. One of the most important assumptions that we make when using the general linear model is that the residuals (that is, the difference between the model’s predictions and the actual data) are normally distributed. This can fail for many reasons, either because the model was not properly specified or because the data that we are modeling are inappropriate.

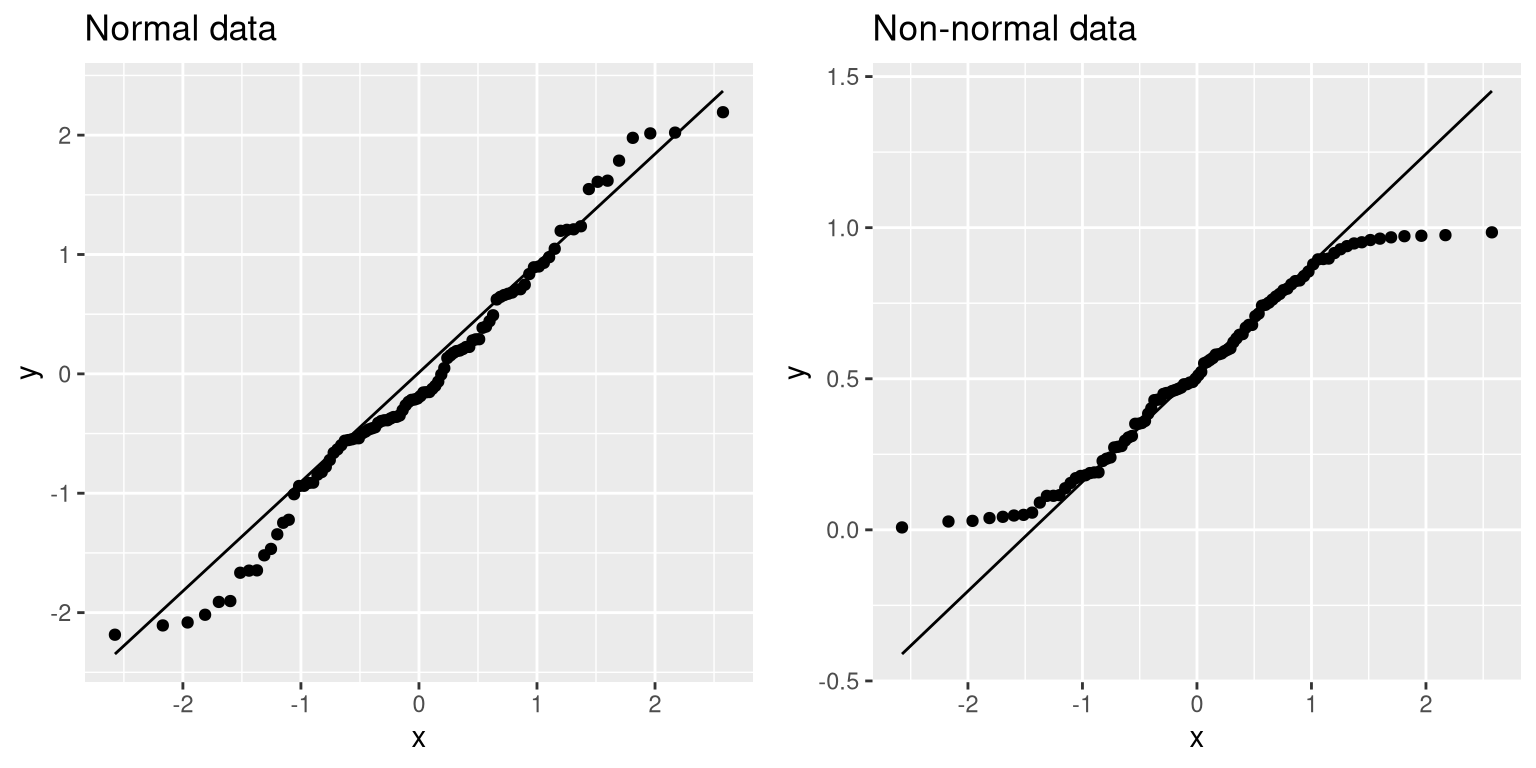

We can use something called a Q-Q (quantile-quantile) plot to see whether our residuals are normally distributed. You have already encountered quantiles — they are the value that cuts off a particular proportion of a cumulative distribution. The Q-Q plot presents the quantiles of two distributions against one another; in this case, we will present the quantiles of the actual data against the quantiles of a normal distribution fit to the same data. Figure 14.5 shows examples of two such Q-Q plots. The left panel shows a Q-Q plot for data from a normal distribution, while the right panel shows a Q-Q plot from non-normal data. The data points in the right panel diverge substantially from the line, reflecting the fact that they are not normally distributed.

qq_df <- tibble(norm=rnorm(100),

unif=runif(100))

p1 <- ggplot(qq_df,aes(sample=norm)) +

geom_qq() +

geom_qq_line() +

ggtitle('Normal data')

p2 <- ggplot(qq_df,aes(sample=unif)) +

geom_qq() +

geom_qq_line()+

ggtitle('Non-normal data')

plot_grid(p1,p2)

Figure 14.5: Q-Q plotsof normal (left) and non-normal (right) data. The line shows the point at which the x and y axes are equal.

Model diagnostics will be explored in more detail in a later chapter.

14.6 What does “predict” really mean?

When we talk about “prediction” in daily life, we are generally referring to the ability to estimate the value of some variable in advance of seeing the data. However, the term is often used in the context of linear regression to refer to the fitting of a model to the data; the estimated values (\(\hat{y}\)) are sometimes referred to as “predictions” and the independent variables are referred to as “predictors”. This has an unfortunate connotation, as it implies that our model should also be able to predict the values of new data points in the future. In reality, the fit of a model to the dataset used to obtain the parameters will nearly always be better than the fit of the model to a new dataset (Copas 1983).

As an example, let’s take a sample of 48 children from NHANES and fit a regression model for weight that includes several regressors (age, height, hours spent watching TV and using the computer, and household income) along with their interactions.

| Data type | RMSE (original data) | RMSE (new data) |

|---|---|---|

| True data | 3.0 | 25 |

| Shuffled data | 7.8 | 59 |

Here we see that whereas the model fit on the original data showed a very good fit (only off by a few kg per individual), the same model does a much worse job of predicting the weight values for new children sampled from the same population (off by more than 25 kg per individual). This happens because the model that we specified is quite complex, since it includes not just each of the individual variables, but also all possible combinations of them (i.e. their interactions), resulting in a model with 32 parameters. Since this is almost as many coefficients as there are data points (i.e., the heights of 48 children), the model overfits the data, just like the complex polynomial curve in our initial example of overfitting in Section 5.4.

Another way to see the effects of overfitting is to look at what happens if we randomly shuffle the values of the weight variable (shown in the second row of the table). Randomly shuffling the value should make it impossible to predict weight from the other variables, because they should have no systematic relationship. The results in the table show that even when there is no true relationship to be modeled (because shuffling should have obliterated the relationship), the complex model still shows a very low error in its predictions on the fitted data, because it fits the noise in the specific dataset. However, when that model is applied to a new dataset, we see that the error is much larger, as it should be.

14.6.1 Cross-validation

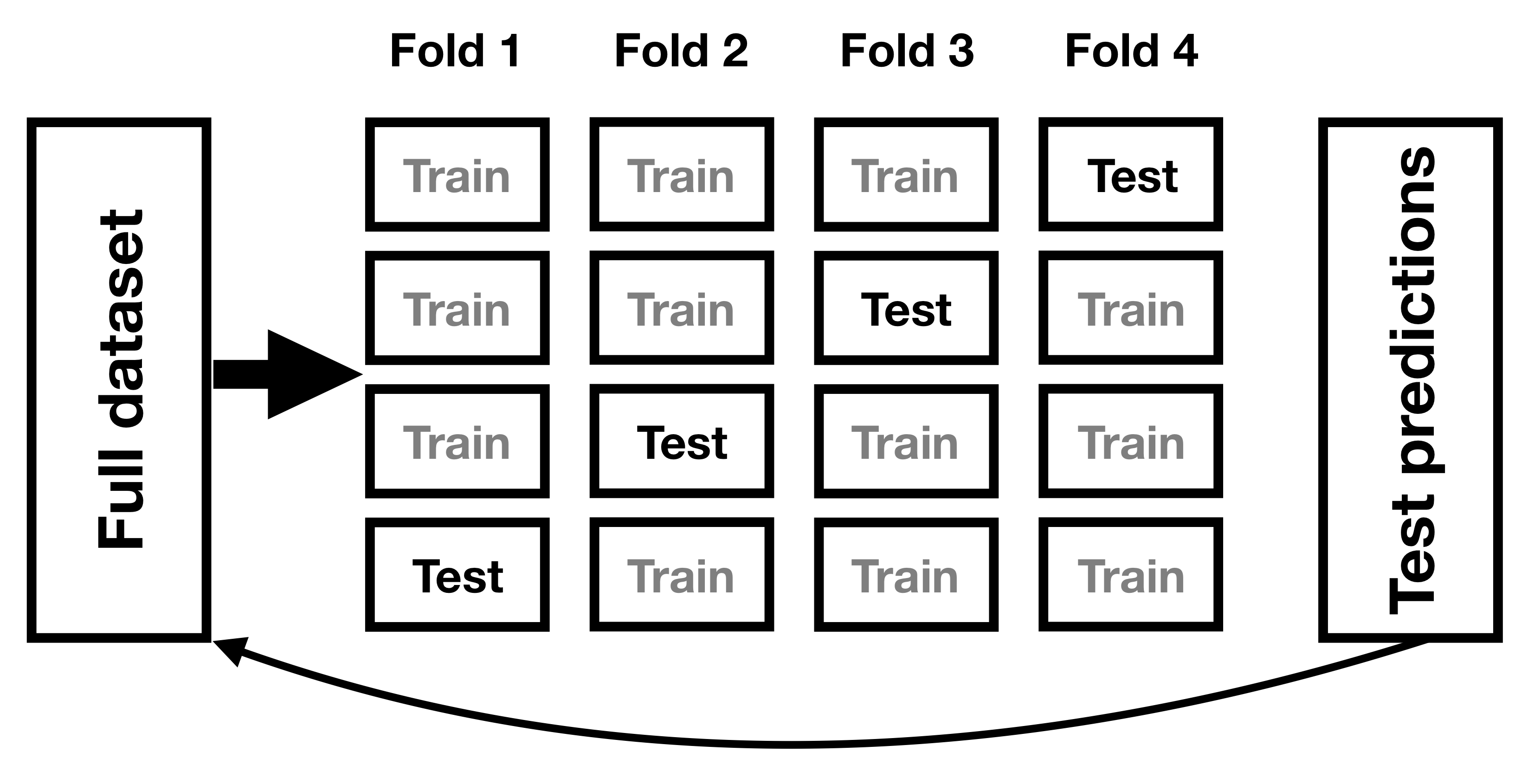

One method that has been developed to help address the problem of overfitting is known as cross-validation. This technique is commonly used within the field of machine learning, which is focused on building models that will generalize well to new data, even when we don’t have a new dataset to test the model. The idea behind cross-validation is that we fit our model repeatedly, each time leaving out a subset of the data, and then test the ability of the model to predict the values in each held-out subset.

Figure 14.6: A schematic of the cross-validation procedure.

Let’s see how that would work for our weight prediction example. In this case we will perform 12-fold cross-validation, which means that we will break the data into 12 subsets, and then fit the model 12 times, in each case leaving out one of the subsets and then testing the model’s ability to accurately predict the value of the dependent variable for those held-out data points. Most statistical software provides tools to apply cross-validation to one’s data. Using this function we can run cross-validation on 100 samples from the NHANES dataset, and compute the RMSE for cross-validation, along with the RMSE for the original data and a new dataset, as we computed above.

| R-squared | |

|---|---|

| Original data | 0.95 |

| New data | 0.34 |

| Cross-validation | 0.60 |

Here we see that cross-validation gives us an estimate of predictive accuracy that is much closer to what we see with a completely new dataset than it is to the inflated accuracy that we see with the original dataset – in fact, it’s even slightly more pessimistic than the average for a new dataset, probably because only part of the data are being used to train each of the models.

Note that using cross-validation properly is tricky, and it is recommended that you consult with an expert before using it in practice. However, this section has hopefully shown you three things:

- “Prediction” doesn’t always mean what you think it means

- Complex models can overfit data very badly, such that one can observe seemingly good prediction even when there is no true signal to predict

- You should view claims about prediction accuracy very skeptically unless they have been done using the appropriate methods.

14.7 Learning objectives

Having read this chapter, you should be able to:

- Describe the concept of linear regression and apply it to a dataset

- Describe the concept of the general linear model and provide examples of its application

- Describe how cross-validation can allow us to estimate the predictive performance of a model on new data

14.8 Suggested readings

- The Elements of Statistical Learning: Data Mining, Inference, and Prediction (2nd Edition) - The “bible” of machine learning methods, available freely online.

14.9 Appendix

14.9.1 Estimating linear regression parameters

We generally estimate the parameters of a linear model from data using linear algebra, which is the form of algebra that is applied to vectors and matrices. If you aren’t familiar with linear algebra, don’t worry – you won’t actually need to use it here, as R will do all the work for us. However, a brief excursion in linear algebra can provide some insight into how the model parameters are estimated in practice.

First, let’s introduce the idea of vectors and matrices; you’ve already encountered them in the context of R, but we will review them here. A matrix is a set of numbers that are arranged in a square or rectangle, such that there are one or more dimensions across which the matrix varies. It is customary to place different observation units (such as people) in the rows, and different variables in the columns. Let’s take our study time data from above. We could arrange these numbers in a matrix, which would have eight rows (one for each student) and two columns (one for study time, and one for grade). If you are thinking “that sounds like a data frame in R” you are exactly right! In fact, a data frame is a specialized version of a matrix, and we can convert a data frame to a matrix using the as.matrix() function.

df <-

tibble(

studyTime = c(2, 3, 5, 6, 6, 8, 10, 12) / 3,

priorClass = c(0, 1, 1, 0, 1, 0, 1, 0)

) %>%

mutate(

grade =

studyTime * betas[1] +

priorClass * betas[2] +

round(rnorm(8, mean = 70, sd = 5))

)

df_matrix <-

df %>%

dplyr::select(studyTime, grade) %>%

as.matrix()We can write the general linear model in linear algebra as follows:

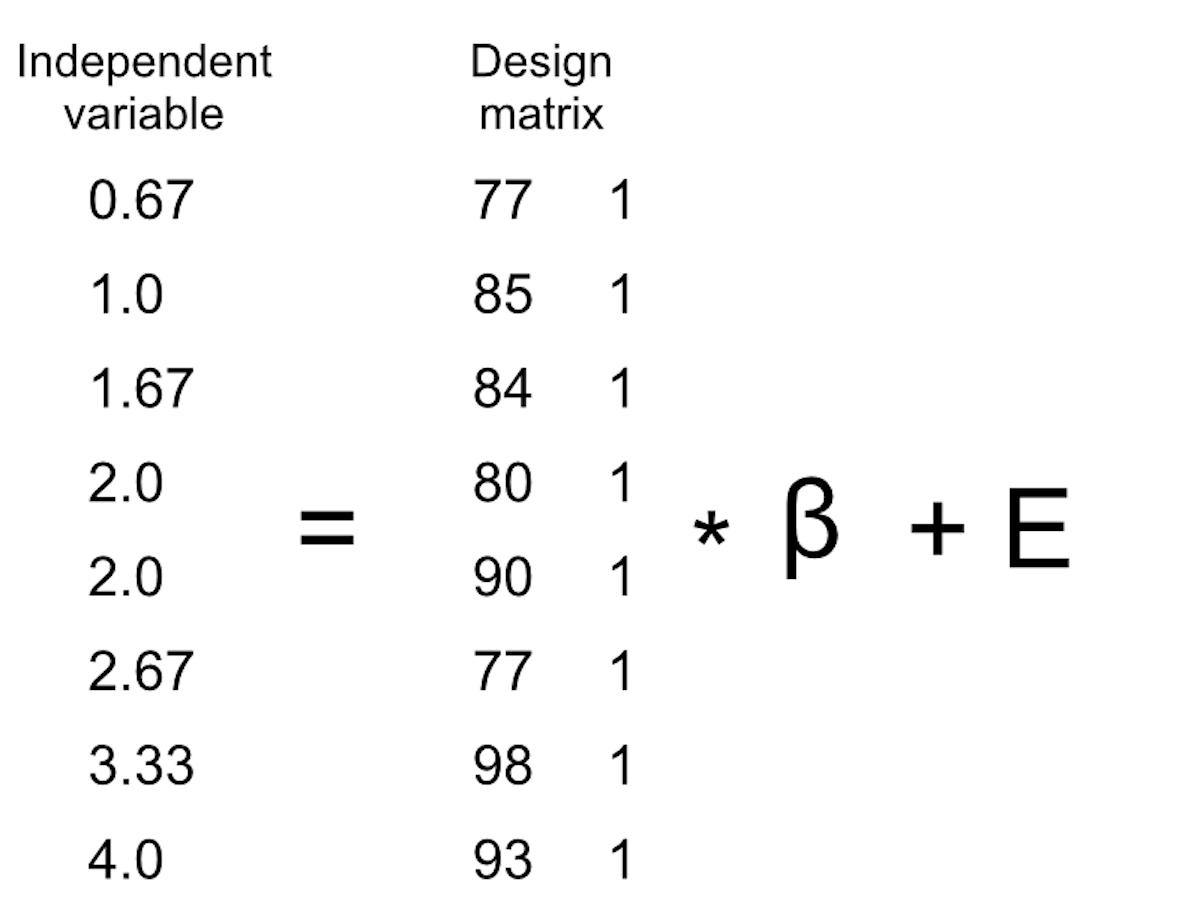

\[ Y = X*\beta + E \] This looks very much like the earlier equation that we used, except that the letters are all capitalized, which is meant to express the fact that they are vectors.

We know that the grade data go into the Y matrix, but what goes into the \(X\) matrix? Remember from our initial discussion of linear regression that we need to add a constant in addition to our independent variable of interest, so our \(X\) matrix (which we call the design matrix) needs to include two columns: one representing the study time variable, and one column with the same value for each individual (which we generally fill with all ones). We can view the resulting design matrix graphically (see Figure 14.7).

Figure 14.7: A depiction of the linear model for the study time data in terms of matrix algebra.

The rules of matrix multiplication tell us that the dimensions of the matrices have to match with one another; in this case, the design matrix has dimensions of 8 (rows) X 2 (columns) and the Y variable has dimensions of 8 X 1. Therefore, the \(\beta\) matrix needs to have dimensions 2 X 1, since an 8 X 2 matrix multiplied by a 2 X 1 matrix results in an 8 X 1 matrix (as the matching middle dimensions drop out). The interpretation of the two values in the \(\beta\) matrix is that they are the values to be multipled by study time and 1 respectively to obtain the estimated grade for each individual. We can also view the linear model as a set of individual equations for each individual:

\(\hat{y}_1 = studyTime_1*\beta_1 + 1*\beta_2\)

\(\hat{y}_2 = studyTime_2*\beta_1 + 1*\beta_2\)

…

\(\hat{y}_8 = studyTime_8*\beta_1 + 1*\beta_2\)

Remember that our goal is to determine the best fitting values of \(\beta\) given the known values of \(X\) and \(Y\). A naive way to do this would be to solve for \(\beta\) using simple algebra – here we drop the error term \(E\) because it’s out of our control:

\[ \hat{\beta} = \frac{Y}{X} \]

The challenge here is that \(X\) and \(\beta\) are now matrices, not single numbers – but the rules of linear algebra tell us how to divide by a matrix, which is the same as multiplying by the inverse of the matrix (referred to as \(X^{-1}\)). We can do this in R:

# compute beta estimates using linear algebra

#create Y variable 8 x 1 matrix

Y <- as.matrix(df$grade)

#create X variable 8 x 2 matrix

X <- matrix(0, nrow = 8, ncol = 2)

#assign studyTime values to first column in X matrix

X[, 1] <- as.matrix(df$studyTime)

#assign constant of 1 to second column in X matrix

X[, 2] <- 1

# compute inverse of X using ginv()

# %*% is the R matrix multiplication operator

beta_hat <- ginv(X) %*% Y #multiple the inverse of X by Y

print(beta_hat)## [,1]

## [1,] 8.2

## [2,] 68.0Anyone who is interested in serious use of statistical methods is highly encouraged to invest some time in learning linear algebra, as it provides the basis for nearly all of the tools that are used in standard statistics.